Introduction#

Every morning I opened my inbox with a familiar feeling: too many messages, too much noise, and zero desire to open Gmail; I needed a way to separate the wheat from the chaff in seconds.

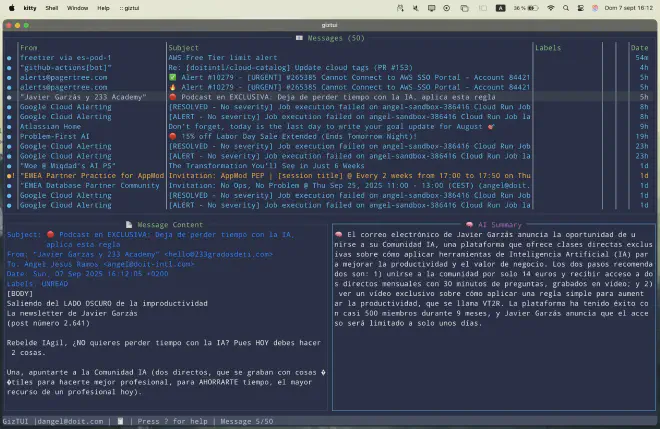

GizTUI was born from a very specific need: to manage my email extremely fast, in a way tailored to my workflow. In my day-to-day I receive far more than I send; my problem is not so much composing emails as consuming information and organizing it without wasting time.

The revolution of AI in programming is not just about speeding up tasks, but about the fact that it has driven development costs into the ground. That changes the rules of the game: you can now afford throwaway development, building tools hyper-personalized to your needs in a matter of hours, and refining on the fly. That combination, minimal costs + extreme personalization, is what truly takes productivity to another level.

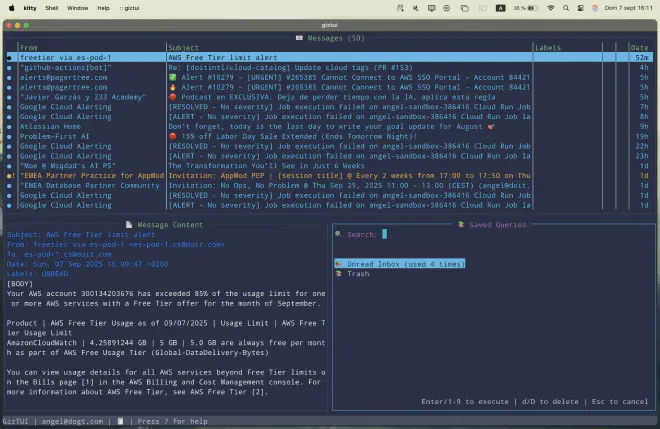

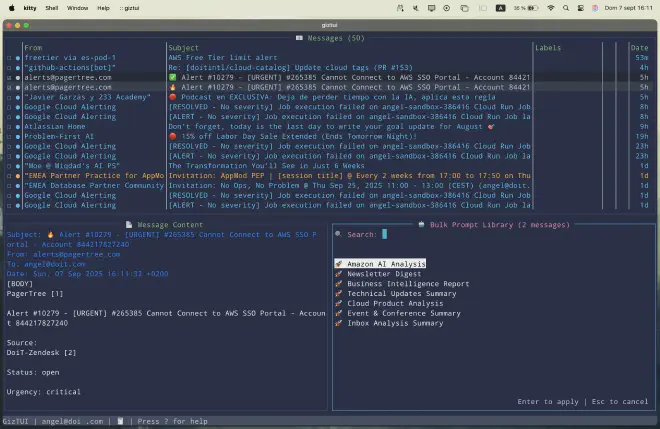

I am not a software engineer. GizTUI is not meant to be a “product” in the strict sense, but an accelerated learning project. Even so, it ended up packing quite a punch: email summaries with AI processing on-device (or through other providers such as Bedrock), advanced searches with saved queries, agile label management and message moves, Vim-style keyboard shortcuts that multiply speed, and even the ability to respond to calendar invites without leaving the terminal.

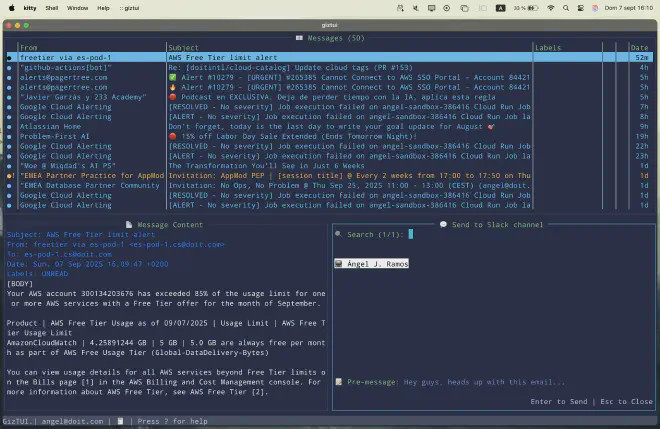

And as the icing on the cake, integrations with Slack and Obsidian, which make the client a natural part of my daily workflow.

Why: why I got into this#

Allow me to give you some context and a few boundary conditions that will help you better understand this project. In my company, the playing field is quite constrained: only the native Gmail client is allowed, IMAP is disabled by Google Workspace admin policies, and classic clients like neomutt or alpine do not work properly with the Gmail API.

On top of that there is a personal factor: I have always been a terminal geek, a big fan of tools like k9s or Vim itself. The idea of having a mail client in the terminal was not just an aesthetic whim, but a way of working that fit me.

And above all, I was looking for a space for practical learning. For me, hands-on learning is the most effective way to absorb new ideas. I wanted a project that was not a trivial toy, but something that solved a real day-to-day problem and forced me to learn in the process. GizTUI ended up being exactly that.

Productivity: iteration that gets you hooked#

This project took me about four weeks of work and ended with more than 400 commits. To put it in perspective: if I had tried to do it on my own, without AI’s help, it would probably have taken me a full year, and that assumes I had the consistency not to abandon it along the way.

The addiction to speed#

That is the first big conclusion: the addictive productivity that AI gives you. You get used to such a high pace of progress that going back feels impossible.

In my case, I noticed it mainly in two areas:

- Vim-style shortcuts applied in bulk mode (e.g., d3d, a5a), which turned repetitive tasks into minimal gestures.

- Direct access to the prompt library, which allowed me to apply summaries, analyses, and chained actions with brutal speed.
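As an illustration of the bulk shortcuts mentioned above, here is a minimal sketch of how a count-based key sequence such as d3d or a5a could be parsed. The action names (`trash`, `archive`), the `ACTIONS` table, and the helper functions are assumptions made for this example; the article does not describe how GizTUI implements its key handling.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical action table; the real GizTUI bindings may differ.
ACTIONS = {"d": "trash", "a": "archive"}

# An action key, a count, then the same action key again (e.g. "d3d").
BULK_PATTERN = re.compile(r"^([a-z])(\d+)\1$")


class BulkCommand(NamedTuple):
    action: str
    count: int


def parse_bulk(keys: str) -> Optional[BulkCommand]:
    """Return the action and message count for input like 'd3d', else None."""
    match = BULK_PATTERN.match(keys)
    if match is None:
        return None
    key, count = match.group(1), int(match.group(2))
    if key not in ACTIONS or count == 0:
        return None
    return BulkCommand(ACTIONS[key], count)


def select_messages(message_ids: list[str], cursor: int, count: int) -> list[str]:
    """Pick `count` messages starting at the cursor, clipped to the list end."""
    return message_ids[cursor:cursor + count]
```

With this sketch, `parse_bulk("d3d")` yields the hypothetical `trash` action with a count of 3, and `select_messages` picks the affected messages starting from the cursor position.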

Also, during the development process, the AI didn’t just execute: it also asked me good questions. Questions that helped me both understand the problem better and propose more advanced solutions than I would have imagined at first.

UI: the necessary hell#

Not everything was a bed of roses. The UI was tedious: defining interactions, states, and layouts took many iterations, and testing was especially heavy. Here multimodality helped a lot: being able to pass screenshots to the model to point out misalignments, cropping, or unexpected behaviors sped up diagnosis and let me ask for very specific changes (“adjust the background of this panel,” or “look at how this widget is cut off”).

In practice, attaching images to pin down the visual context reduces ambiguity and prevents the model from going off on tangents, especially when the problem is purely presentational. This flow works with both Claude (vision) and other modern multimodal models, and it makes a big difference when you are polishing UX details.

Watch the copilot#

There is also a key point when working with a model: you have to watch it closely. At first, the speed at which it progresses is such that it’s hard to keep up and understand everything it is changing. And in my case, since I’m not a programming guru, that meant accepting certain changes almost on faith.

Of course, when the model faces a challenge it doesn’t know how to solve, the situation reverses: you have to be the one to help it troubleshoot. Fortunately, this wasn’t new to me: in my previous roles, as the person responsible for delivery teams, my daily bread was precisely helping developers understand where problems came from. That experience was key to not getting lost in the model’s dead ends.

Vibe Coding: coding with company#

During the development of GizTUI I mainly used two tools: Cursor and Claude Code. Inside Cursor, though, I almost always preferred Claude’s model, at least until I ran out of tokens and had to switch to automatic mode. I also tried OpenAI’s GPT-5, and while it is indeed a bit more “savvy,” the difference didn’t seem spectacular enough to change my main workflows.

Why Cursor? Because it is very well integrated into the IDE and, for other workflows, I find it a tool with a hard-to-beat price-to-performance ratio. With Cursor you can see changes directly in files, approve them, modify them, or revert them in a very natural way. In terms of integrated UX, Cursor still seems a step ahead.

But thanks to a friend’s advice (thanks, Víctor 🙌), I gave Claude Code a chance and it did not disappoint. I quickly realized, though, that the Pro subscription falls short if you want to do anything halfway serious. I eventually moved to the Claude Max plan (€100/month) to work continuously. Now I have to decide whether to keep the investment, because I’m not a “hard developer” in the classic sense.

What I liked most about Claude Code was its configuration model with CLAUDE.md, which I find much more intuitive than the scope system Cursor uses with .mdc rules. In my case I even prepared two custom commands that can be seen in the repo:

- feature-implement: with everything it should consider when I ask it to implement a feature.

- feature-debug: with the best practices I collected to debug well.

Regarding rules, the same thing happens to me with both Cursor rules and CLAUDE.md: when I make them long or too detailed, consistency suffers. I don’t always manage to have every instruction followed to the letter, which forces iteration.

As a general comparison, my sense is:

- Claude Code: more efficient and consistent for development.

- Cursor: better integrated user experience in the IDE.

Software engineering: discipline in the age of AI#

Building GizTUI reminded me of something fundamental: even when working with AI, separating design from implementation remains key. Before touching a single line, you have to understand the problem space well, define a reasonable solution, and only then move into the implementation phase.

Why? Because in flow mode, if you don’t know what the LLM’s plan is to solve the challenge you’ve put before it, you risk it going off on a tangent and delivering something you don’t need. A concrete example: I had a problem with emojis that took up more space than usual and messed up the screen. The reasonable thing was to replace them with safer ones. The model, however, set out to create a system of validations and functions that unnecessarily complicated the code. Without a plan, the solutions can be a bit overkill.
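To show how small the "replace them with safer ones" approach can be, here is a minimal sketch based on a plain substitution table. The specific emojis and the ASCII stand-ins are assumptions chosen for illustration; the article does not list which characters caused the problem or what replaced them.

```python
# Hypothetical substitution table: emojis that may render wider than one
# terminal cell, mapped to plain ASCII stand-ins. Not the actual GizTUI list.
SAFE_REPLACEMENTS = {
    "\U0001F4E7": "[mail]",   # E-MAIL SYMBOL
    "\u2709\ufe0f": "[env]",  # ENVELOPE followed by a variation selector
    "\U0001F4CE": "[att]",    # PAPERCLIP
}


def make_terminal_safe(text: str) -> str:
    """Replace known problematic emojis with fixed-width ASCII labels."""
    for emoji, replacement in SAFE_REPLACEMENTS.items():
        text = text.replace(emoji, replacement)
    return text
```

A lookup table like this is deliberately unsophisticated: there is no validation layer and no width calculation, only a list that can be extended when a new misbehaving character shows up.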

LLM-assisted planning#

My countermeasure to this was to use AI-assisted planning as a discipline:

- In Cursor, asking the model to explain what it understood and what it was going to do.

- In Claude Code, taking advantage of plan mode with Shift+Tab.

- For large features, I asked it to write the plan in a file (e.g., FEATURE_X.md) and then validated that nothing was left half-done.

- Along with the plan, I also required a test plan: that way I could put on my QA hat and validate the functionality (more on this in the following paragraphs).

This prevented losing the plot when, between iterations, testing, and fixes, the original context was fading.
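Checking that nothing in a plan file was left half-done can be partly automated. The sketch below assumes the plan is written with Markdown task-list checkboxes (`- [ ]` and `- [x]`); that format, the `pending_tasks` helper, and the sample plan are assumptions for illustration, since the article does not show what FEATURE_X.md looks like.

```python
import re

# Assumes Markdown task-list syntax: "- [ ] open item" / "- [x] done item".
TASK_PATTERN = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.*)$")


def pending_tasks(plan_text: str) -> list[str]:
    """Return the descriptions of all unchecked items in a plan document."""
    pending = []
    for line in plan_text.splitlines():
        match = TASK_PATTERN.match(line)
        if match and match.group(1) == " ":
            pending.append(match.group(2).strip())
    return pending


# Hypothetical plan content, only to exercise the function.
example_plan = """\
# FEATURE_X
- [x] Define the data model
- [ ] Wire the keyboard shortcut
- [x] Write unit tests
- [ ] Run the manual test plan
"""

for task in pending_tasks(example_plan):
    print(f"still open: {task}")
```

Running a check like this before closing a feature branch gives a quick list of plan items the model (or you) skipped.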

Living documentation#

To keep chaos at bay, I created a series of reference documents, from ARCHITECTURE.md to TESTING.md and KNOWN_ISSUES.md, which served as a record of architecture decisions. The AI did not always take them into account (it tends to forget them as a session grows), but they served as living documentation and as an anchor point for later refactors.

TODO.md as the project’s pulse#

A habit that proved key for me was maintaining a TODO.md. It wasn’t just a task list, but a kind of living roadmap: what I wanted, what was missing, what I shelved. That file served as the project’s compass and gave me clarity in the midst of the natural chaos of AI-assisted development.

Commits and branches#

Another habit that became essential: semantic commits and one branch per feature. I prefer to commit with each chunk of code and then iterate. Otherwise, in the middle of troubleshooting you can dirty the code and, when rolling back, you no longer have a clean state. With small, reversible commits, I was able to get back on the right track without drama.
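One way to keep commit subjects consistent is a small check that can run by hand or from a commit hook. The sketch below assumes the widely used `type(scope): summary` convention; the list of allowed types and the `is_semantic` helper are assumptions, as the article does not specify which convention the project follows.

```python
import re

# Hypothetical set of accepted commit types; adjust to the project's own rules.
ALLOWED_TYPES = ("feat", "fix", "docs", "refactor", "test", "chore")

# type, optional (scope), optional "!" for breaking changes, then ": summary".
COMMIT_PATTERN = re.compile(
    r"^(?P<type>[a-z]+)(\((?P<scope>[^)]+)\))?(?P<breaking>!)?: (?P<summary>\S.*)$"
)


def is_semantic(subject: str) -> bool:
    """Check whether a commit subject line follows the assumed convention."""
    match = COMMIT_PATTERN.match(subject)
    return bool(match) and match.group("type") in ALLOWED_TYPES
```

For example, `is_semantic("feat(search): add saved queries")` passes, while a subject like `"updated stuff"` is rejected.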

Refactoring and technical debt#

From time to time you have to take a step back and ask the model to re-analyze the repository to detect improvements and accumulated technical debt. Toward the end of the project, I reinforced this with specialized agents in Claude, each with a specific role:

- architecture-validator: reviews architecture and separation of responsibilities.

- config-consistency-maintainer: ensures consistency between configs, docs, and examples.

- feature-documentation-validator: verifies that each feature is well documented.

- feature-parity-validator: checks parity between keyboard shortcuts and commands.

- test-generator: generates complete test suites (unit, integration, edge cases).
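The agents above are LLM-driven, but some of their checks have a simple deterministic counterpart. As an example, the parity check between keyboard shortcuts and commands can be sketched as a set comparison. The binding tables and the `parity_gaps` function are hypothetical and do not reflect GizTUI's actual configuration format.

```python
def parity_gaps(
    shortcuts: dict[str, str], commands: dict[str, str]
) -> dict[str, list[str]]:
    """Compare the actions reachable by shortcut with those reachable by command."""
    shortcut_actions = set(shortcuts.values())
    command_actions = set(commands.values())
    return {
        # Actions bound to a key but with no equivalent command.
        "missing_command": sorted(shortcut_actions - command_actions),
        # Actions available as a command but with no key binding.
        "missing_shortcut": sorted(command_actions - shortcut_actions),
    }


# Hypothetical bindings, only to exercise the function.
shortcuts = {"d": "trash", "a": "archive", "s": "search"}
commands = {":trash": "trash", ":archive": "archive", ":label": "label"}

print(parity_gaps(shortcuts, commands))
```

A script like this catches only mechanical gaps; the agent remains useful for judging whether a shortcut and a command really behave the same way.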

Test plan#

Tests hurt, both to write and to maintain. But integrating them from the feature definition (using my own feature-implement and feature-debug commands) made the difference. The functional test plan, along with unit tests, prevented silly regressions in critical areas like threading or shortcuts.

Simplifying vs overcomplicating#

LLMs tend to extremes:

- Sometimes they over-simplify: I remember when, stuck with a linter issue in a release, the model directly suggested disabling the linter.

- Other times they over-complicate: as with the emojis, creating unnecessary architectures for a simple problem.

The challenge is to find the balance: constantly validate that the solution is proportional to the problem.

Prompt & Context Engineering: beyond the prompt#

One of the biggest learnings from GizTUI is that writing good prompts is not enough: you have to be intentional with the context in which you use them. Some call it context priming or context crafting.

In practice, this means preparing the model with the right information before asking it for anything. In my case, a recurring technique was something as simple as:

“Explain how feature X works. Now I want something similar, but that also does Y and Z.”

That kind of preparation gave the model a frame of reference and prevented it from reinventing the wheel every time.

This, combined with two more pillars, allowed me to maintain coherence:

- Prompt engineering: through custom commands like feature-implement or feature-debug, which encapsulated best practices and a checklist of what could not be missing.

- Documentary context priming: using architecture decision docs as a living reminder (even though the model didn’t always have them present, they were a guide to consistency to return to during refactors).

A consequence of all this is that you have to actively manage the context window. Long sessions end up filling the model’s context window, and it starts forgetting important details. That’s where Claude Code’s /compact command comes in: it summarizes the current state of the conversation and refreshes the context before continuing. I learned that the most effective approach is not to wait for compaction to trigger automatically, but to run it proactively when you see the thread inflating too much.

In short: prompts are the spark, but context is the wood that keeps the fire going. And if you don’t take care of that wood, the model drifts, forgets, or reinvents.

Conclusions and reflections#

The first conclusion is obvious: AI changes the game. The cost of building is practically nil compared to a few years ago. That means what is truly scarce is not the ability to code, but your ability to decide where to focus.

The second is that we are entering the era of hyper-personalization and disposable code. Just as we now have throwaway Kubernetes clusters, we can also afford throwaway applications. Custom prototypes, built in hours or days, that solve a very specific problem, fit like a glove, and then disappear. That lets you iterate faster, learn more quickly, and adapt nimbly to a changing environment where you often don’t even know the whole problem at the start.

The third conclusion is that old habits still hold. Models are not deterministic: they will make mistakes, they will drift, and they will forget what they were doing. That is why setting guardrails is key. I compare it to Ulysses tying himself to the mast to resist the sirens’ song: you need discipline not to be carried away by “brilliant solutions” you don’t actually want. Throughout the article I have shared practices that have worked as those guardrails for me: planning, a test plan, small commits, living documentation…

The fourth is more personal: I would do it again without hesitation. GizTUI did not remain a lab experiment; it is a tool I already use in my day-to-day. It gives me real productivity gains and, above all, gives me back the time and energy that email used to eat up. I now devote them to tasks where I add more value to my company and become more competitive. Win–win.

Would I do it the same way? Of course not. With everything I’ve learned, today I would design some parts differently. But that is precisely the essence of this kind of project: build, learn, refine.

If you want to see the full list of features that came out of this process, it’s documented in detail in the GizTUI repository on GitHub.

I’d love to hear what you think or what other ideas come to mind.

My invitation is simple: dare to experiment.

Today AI gives us the ability to create hyper-personalized tools at a speed and cost unthinkable a few years ago. You don’t need to be an expert engineer to start; you just need a real problem and the desire to learn.